(And why we made one our PR person for SXSW.)

Earlier this month, we launched a new Twitter account, The PR Human (@thePRhuman). Despite the handle (and its proper name, Very Good PR Person Who Is A Real Human), it’s actually the curated output of a recurrent neural network — a type of machine learning tool.

Okay, sounds nifty. But what does that mean in practice?

Long story short, we took a pile of data – tech news stories, tweets tagged with #SXSW, press releases – and gave it to an intelligent computer program that can learn from what it sees.

“Look,” we told it, “this is what tech hype looks like. Study it closely. Got it? OK, now make some of your own.”

And it did.

The Software Startup Announces the Robots

— Very Good PR Person Who Is A Real Human (@thePRhuman) February 12, 2018

A New Report: The Windors Are the Bett Over Obbula

— Very Good PR Person Who Is A Real Human (@thePRhuman) February 12, 2018

A Recent Tax Banker: The Tech Technology

— Very Good PR Person Who Is A Real Human (@thePRhuman) February 13, 2018

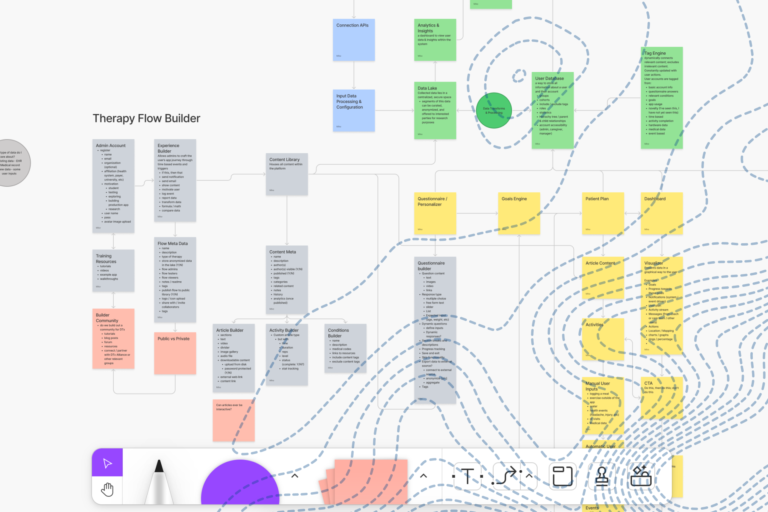

How it works

First, the big picture. One of the main things machine learning systems do is scrutinize complex data and identify patterns. That’s how machine learning is being used to diagnose pneumonia and heart disease, predict train delays, and even create new Magic: The Gathering cards.

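Getting more specific to our project, a recurrent neural network is a type of machine learning system loosely modeled on the human brain. (Feeling sci-fi yet?) Just as your brain is a collection of individual neurons, a neural network is a group of interconnected nodes that work in unison to process and interpret data. The “recurrent” part means it reads data as a sequence, one piece at a time, and carries a running memory of what it has already seen into each new step, which is what makes it a good fit for text.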
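For readers who want to see what “recurrent” means mechanically, here is a minimal sketch of one simple recurrent layer in plain Python. It assumes the classic Elman-style update, where the new hidden state is tanh(W_x·x + W_h·h_prev + b). The toy weights and function names are hypothetical, and real networks built on TensorFlow use much larger, trained layers (often LSTMs) rather than anything this small.

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rnn_step(x: Sequence[float], h_prev: Sequence[float],
             w_x: Matrix, w_h: Matrix, b: Sequence[float]) -> Vector:
    """One Elman-style step: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    from_input = matvec(w_x, x)        # what the current input contributes
    from_memory = matvec(w_h, h_prev)  # what the previous state contributes
    return [math.tanh(i + m + bias) for i, m, bias in zip(from_input, from_memory, b)]


def run_rnn(inputs: Sequence[Sequence[float]], w_x: Matrix, w_h: Matrix,
            b: Sequence[float]) -> Vector:
    """Feed a whole sequence through the layer, carrying the hidden state forward."""
    h = [0.0] * len(b)  # the layer's "memory" starts out empty
    for x in inputs:
        h = rnn_step(x, h, w_x, w_h, b)
    return h


# Hypothetical toy weights: 2-dimensional inputs and a 2-dimensional hidden state.
W_X = [[0.5, -0.3], [0.8, 0.1]]
W_H = [[0.2, 0.4], [-0.6, 0.3]]
B = [0.0, 0.1]

a = [1.0, 0.0]
c = [0.0, 1.0]
print(run_rnn([a, c], W_X, W_H, B))
print(run_rnn([c, a], W_X, W_H, B))  # same inputs in a different order
```

Feeding the same two inputs in a different order leaves the layer in a different final state. That order-sensitive memory is what lets a network trained on text care about which characters came first.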

And just like a fresh human brain, it doesn’t do a whole lot out of the box – it needs to be trained, and it needs to learn.

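There are different ways of approaching this challenge. In our case, we’re relying on a concept called supervised learning, where you hand-select data sets that look like the output you want and give them to the neural network as study materials. For a character-level text generator like ours, “studying” means repeatedly guessing the next character from the characters before it, then adjusting whenever the guess is wrong.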
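In a character-level generator, the “right answers” come straight from the training text: every short run of characters is an input, and the character that follows it is the label. A minimal sketch of that slicing, assuming a fixed context window; the function name and window size are illustrative, not textgenrnn’s actual internals.

```python
from typing import List, Tuple


def make_training_pairs(text: str, window: int) -> List[Tuple[str, str]]:
    """Slice text into (context, next_character) pairs for next-character prediction."""
    pairs = []
    for i in range(len(text) - window):
        context = text[i:i + window]  # what the network sees
        target = text[i + window]     # what it should learn to predict
        pairs.append((context, target))
    return pairs


for context, target in make_training_pairs("Apple adds live news", window=5)[:3]:
    print(repr(context), "->", repr(target))
```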

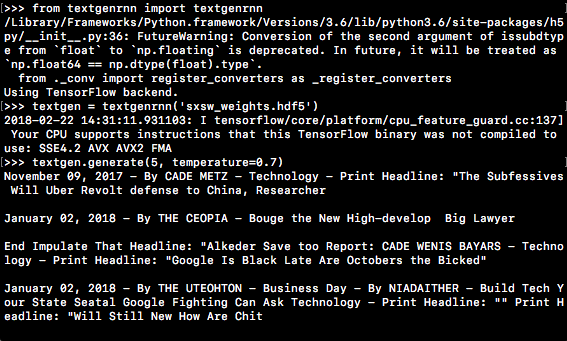

Before we could start ‘feeding’ our neural network, we had to get it set up. The PR Human runs on two free tools – Google’s TensorFlow machine learning framework and textgenrnn, a free, open-source Python module available on GitHub.

Both of these tools are available through pip, Python’s package manager, which means it doesn’t take much more than a passing familiarity with programming to get them up and running (more on that later).
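Installing both is typically a one-line `pip install tensorflow textgenrnn`. As a hedged sketch, this snippet uses only the standard library’s `importlib.util.find_spec` to confirm Python can see the packages before you try to train anything. The helper name is hypothetical, and it checks presence only, not versions or compatibility between the two.

```python
import importlib.util
from typing import Dict, Iterable


def check_packages(names: Iterable[str]) -> Dict[str, bool]:
    """Report whether each top-level package can be found on the current Python path."""
    # find_spec returns None for a missing top-level module instead of importing it
    return {name: importlib.util.find_spec(name) is not None for name in names}


status = check_packages(["tensorflow", "textgenrnn"])
for name, found in status.items():
    print(f"{name}: {'found' if found else 'missing, try: pip install ' + name}")
```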

Back to the training side of the equation. For @thePRhuman, we wanted the output to look like tweets and news stories from a tech news blog, so that’s where we started.

Here’s an example of what our training file looks like (you’ll notice it’s quite messy):

Hacker group failverflow shared a photo of a Nintendo Switch running

Debian, a distribution of Linux

Hacker group manages to run Linux on a Nintendo Switch | Hacker group failverflow shared a photo of a Nintendo Switch running Debian, a distribution of Linux (via Nintendo Life). The group claims that …

The only person who comes out of this trial looking like a winner is @Uber’s new chief executive Dara Khosrowshahi

Apple adds live news channels to TV app for iOS and Apple TV

Apple adds live news channels to TV app for iOS and Apple TV |…After teasing the feature back in September, Apple is adding support for live news in the TV app on the iPhone, iPad and Apple TV — tomac first …

If neural networks were truly intelligent (in the sci-fi sense of the term), you could take a “fire and forget” approach to their education. In reality, the trick is to get the network to learn what you want it to learn. That means it’s important to check in periodically and adjust the training data as needed.

In our case, the early training data was largely tweet-based. (We wanted to make tweets, so it was a rational place to start.)

Unfortunately, that meant the output looked like this:

@ @BiTCons

SpaceX #Sholkophon #fildalkHouldro

told some problems and opening in data joining Secret, and 2015, which has been cauding to time these both stadio and fundraces by #SXSWPMike

New Hack at #SXSW #SXSW | HBO Resons

So what’s going on here? Short answer: our neural network learned the quirks of tweets a little too well. You can see where it’s picked up on specific things – @names, hashtags, specific companies like SpaceX and HBO – but the syntax is all wrong.

To correct for this, we gave it more data that was in proper sentence format: headlines, article synopses, and press releases. In other words, data that would better teach it what an English sentence should look like. Then we gave it some time to think and checked in again.
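When rebalancing like this, it helps to measure how tweet-heavy the training file actually is rather than eyeballing it. A minimal sketch, assuming one item per line and treating any line with an @mention or #hashtag as tweet-like; that heuristic and the helper names are hypothetical, and the sample lines are taken from the training excerpt above.

```python
import re
from typing import Iterable, List

# Hypothetical heuristic: a line is "tweet-like" if it contains an @mention or #hashtag.
TWEET_MARKERS = re.compile(r"[@#]\w+")


def tweet_share(lines: Iterable[str]) -> float:
    """Fraction of non-empty lines that contain mentions or hashtags."""
    items = [line for line in lines if line.strip()]
    if not items:
        return 0.0
    tweety = sum(1 for line in items if TWEET_MARKERS.search(line))
    return tweety / len(items)


def build_training_file(tweets: List[str], sentences: List[str]) -> str:
    """Combine sources into one item per line, dropping blanks and exact duplicates."""
    seen = set()
    combined = []
    for line in tweets + sentences:
        line = line.strip()
        if line and line not in seen:
            seen.add(line)
            combined.append(line)
    return "\n".join(combined) + "\n"


tweets = [
    "The only person who comes out of this trial looking like a winner is "
    "@Uber's new chief executive Dara Khosrowshahi",
]
sentences = [
    "Apple adds live news channels to TV app for iOS and Apple TV",
    "Hacker group manages to run Linux on a Nintendo Switch",
]
corpus = build_training_file(tweets, sentences)
print(f"tweet-like share: {tweet_share(corpus.splitlines()):.0%}")
```

Re-running a check like this after each round of new data gives a rough, repeatable number to compare against how the output looks.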

This time around, it was a lot closer to what we wanted:

The reporting another raised $30 as of the first for in including school film.

Facebook and students reported on Senalmorious http://tcrn.ch/Esxl X Fronsin Festival said the proversaw, businesses to make but it is a conference with educator has already to really announce the c

#SXSW But Sad is former the more deal data early directly.)

By the way – those nonsense words like Senalmorious, Fronsin, and proversaw? They aren’t words it has seen before; they’re the network’s interpretation of what a new word might look like. You can adjust this “creativity” with a setting usually called temperature, which controls how conservative the network is when it picks each next character.

It’s a balancing act. Too little creativity, and you get output like this:

A startup to see the company to see the company to be and a startup to see the company that its beat a star for the company that could be a startup and the company to see the company

Too much, and it’s nothing but senalmorious, fronsin, proversaw, and so on:

Pre-Eudout Alprior has fox loces e-Drcips– Ubd overi rypabrns scenito Inc.”
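Under the hood, this creativity dial is usually implemented as temperature scaling (textgenrnn exposes it as a `temperature` setting). A minimal sketch in plain Python: the model’s raw scores for each candidate next character are divided by the temperature before being turned into probabilities, so low values sharpen the distribution toward the safest choice and high values flatten it toward long shots. The scores below are made up for illustration.

```python
import math
import random
from typing import Dict


def apply_temperature(scores: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Turn raw next-character scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {ch: s / temperature for ch, s in scores.items()}
    top = max(scaled.values())  # subtract the max before exp() for numerical stability
    exps = {ch: math.exp(s - top) for ch, s in scaled.items()}
    total = sum(exps.values())
    return {ch: e / total for ch, e in exps.items()}


def sample_next(scores: Dict[str, float], temperature: float, rng: random.Random) -> str:
    """Pick the next character according to the temperature-adjusted probabilities."""
    probs = apply_temperature(scores, temperature)
    chars = list(probs)
    return rng.choices(chars, weights=[probs[c] for c in chars], k=1)[0]


# Hypothetical scores a model might assign to the character after "startu".
scores = {"p": 4.0, "r": 1.0, "x": 0.5}
for t in (0.2, 1.0, 2.0):
    probs = apply_temperature(scores, t)
    print(t, {ch: round(p, 3) for ch, p in probs.items()})

rng = random.Random(0)
print("".join(sample_next(scores, 1.0, rng) for _ in range(10)))
```

At 0.2 nearly all the probability lands on “p”, which is roughly how you end up with “to see the company to see the company”; at 2.0 the unlikely characters get a real share, which is where words like “proversaw” come from.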

This is more or less the same process that’s used to train neural networks to diagnose illness (albeit a lot sloppier). Only instead of tweets, you’d be giving it sets of radiology imagery and diagnosis codes, then giving it a new image and asking it to “guess” what the code should be.


We can do something similar with @thePRhuman, too, asking it to start a sentence off with “Arcweb is” and getting something like “Arcweb is algered to help recent the partnership scare as the economic technology on him.”
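Seeding works because generation is just repeated next-character prediction: the prefix fills the model’s context, and it continues from there. To show the idea without TensorFlow, this sketch swaps in a much simpler model, a character-level Markov chain that looks back a fixed number of characters. It is not how an RNN works internally, and the toy corpus, names, and settings are all hypothetical.

```python
import random
from collections import defaultdict
from typing import DefaultDict, Dict, List


def train_markov(text: str, order: int) -> DefaultDict[str, List[str]]:
    """Map each `order`-character context to the characters seen right after it."""
    model: DefaultDict[str, List[str]] = defaultdict(list)
    for i in range(len(text) - order):
        model[text[i:i + order]].append(text[i + order])
    return model


def generate(model: Dict[str, List[str]], prefix: str, order: int,
             length: int, rng: random.Random) -> str:
    """Continue `prefix` one character at a time; stop early if the context is unseen."""
    out = prefix
    for _ in range(length):
        followers = model.get(out[-order:])  # .get avoids growing the defaultdict
        if not followers:
            break
        out += rng.choice(followers)
    return out


# Hypothetical toy corpus; a real training file would be far larger.
corpus = "Arcweb is announcing a new startup. The startup is announcing a new report. "
model = train_markov(corpus, order=4)
print(generate(model, "Arcweb is", order=4, length=40, rng=random.Random(42)))
```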

(We should reiterate that neural networks have no concept of radiology, diagnoses, tweets, humor, or anything else. All they “know” is how one set of data relates to another set of data.)

The neural network that creates @thePRhuman’s content could be a lot better – only about half of what it produces is usable right now, and it’s learned a lot of bad lessons. (Due to the structure of some of our data, it produces a lot of output formatted like “June 28, 2017 – By JACK – Technology – Print Headline: ‘The Banker Startup Startup Festival Start-Offended.’”) That means we throw out a lot of junk to get to the good stuff – hence, “curated.”
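Some of that curation can be automated before a human ever reads the candidates. A minimal sketch, assuming two hypothetical rules: drop anything that opens with the dateline-and-byline pattern quoted above, and drop lines where too large a share of words never appeared in training (the kind of output shown earlier with “Pre-Eudout Alprior”). The 0.3 threshold, the tiny vocabulary, and the names are illustrative; deciding what’s actually funny still takes a person.

```python
import re
from typing import Iterable, List, Set

# Outputs that open like "June 28, 2017 – By JACK – ..." (en dash or hyphen separators).
BYLINE = re.compile(r"^[A-Z][a-z]+ \d{1,2}, \d{4}\s*[–-]\s*By\b")
WORD = re.compile(r"[a-z]+")


def invented_ratio(line: str, vocab: Set[str]) -> float:
    """Share of words in the line that never appeared in the training vocabulary."""
    words = WORD.findall(line.lower())
    if not words:
        return 1.0
    return sum(1 for w in words if w not in vocab) / len(words)


def curate(candidates: Iterable[str], vocab: Set[str], max_invented: float = 0.3) -> List[str]:
    """Keep candidates that avoid the byline format and aren't mostly invented words."""
    kept = []
    for line in candidates:
        line = line.strip()
        if not line or BYLINE.match(line):
            continue
        if invented_ratio(line, vocab) > max_invented:
            continue
        kept.append(line)
    return kept


# Hypothetical vocabulary; in practice it would come from the whole training file.
vocab = {"the", "software", "startup", "announces", "robots", "banker",
         "technology", "tech", "new", "report"}
candidates = [
    "The Software Startup Announces the Robots",
    "June 28, 2017 – By JACK – Technology – Print Headline: The Banker Startup",
    "Pre-Eudout Alprior has fox loces e-Drcips",
]
kept = curate(candidates, vocab)
print(f"kept {len(kept)} of {len(candidates)}: {kept}")
```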

All of these problems could be solved with more and better data and more time for training. But for our purposes (making funny tweets), it’s good enough.

Why we did this

Okay, so our neural network makes funny text. But what’s the point? This isn’t the fanciest machine learning application, no doubt. It’s not even the first one Arcweb has done.

Here’s the big takeaway: when we built our Traveler machine learning app, it was something our engineers built. Not so with The PR Human.

In fact, no new development went into it. Not a line of code was written (by us, anyway – enormous credit needs to go to Max Woolf, creator of textgenrnn, and to Google for making TensorFlow open source and free to use). All the work was in gathering the correct data and training the system.

And therein lies the point. All this was possible because machine learning is becoming a platform. It’s not sci-fi any longer – it’s just another business tool. That means it’s no longer something companies might use a decade from now; it’s something they can use right now.

There are already commercial solutions for machine learning that take most of the tech legwork out of it, the same way Amazon’s AWS platform takes the legwork out of hosting or Salesforce takes the legwork out of building a CRM. (In fact, Amazon now offers its own machine learning platform, Amazon SageMaker.)

If you work in healthcare, there are companies with machine learning image recognition platforms. If you’re in finance, you can adopt a platform that’s already trained to detect certain types of fraud. And on and on and on – whether you’re looking for an out-of-the-box machine learning tool or need a platform to tinker on, machine learning is market-ready.

By the way, none of this is to suggest that machine learning has reached its endpoint. The industry is in its infancy, and there will probably be a rapid proliferation of platforms and products over the next few years.

But if you do work that involves taking a big mountain of data and putting it in context – well, that’s what machine learning is really good at. And if it’s not an area you’re exploring yet, it should definitely be high up on your to-do list.

Because in the not-too-distant future, it’s just going to be another fact of doing business.


Want more wacky machine learning tweets? Follow @thePRhuman on Twitter.

Interested in machine learning and other emerging technologies? Sign up for our Product Hacker and HealthWire newsletters for info on blockchain, machine learning, voice interfaces, and more.

Extra special thanks to Max Woolf, author of textgenrnn.